Difference Between Concurrent Computing and Parallel Computing

In many fields, the words concurrent and parallel are used synonymously, but not so in programming, where they describe fundamentally different concepts.

What is Concurrent Computing?

Concurrent computing is the execution of multiple computational tasks in overlapping time periods instead of sequentially. It is the ability of a system to make progress on several computations within overlapping time frames, whether or not any two of them run at the same instant. Concurrency applies whenever more than one task is in progress at the same time. These tasks may be implemented as separate programs, or as a set of processes or threads created by a single program. The tasks may be executed on a single processor, multiple processors, or distributed across a network.

Concurrent computing is related to parallel computing, but focuses more on the interactions between tasks. Concurrency means that multiple tasks are in progress during the same period, not that they necessarily execute at the same instant. Whether the tasks actually run at the same time is an implementation detail: they can execute on a single processor through interleaved execution or on multiple physical processors. A common example is a program that calculates the sum of a large list of numbers by splitting the list among several tasks; the program is concurrent whether those tasks take turns on one processor or run side by side on several.
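As a rough illustration of several tasks sharing one processor, the sketch below uses Python generators and a simple round-robin loop. The names `task` and `run_interleaved` are hypothetical, chosen only for this example; a real operating system scheduler works very differently, but the overlapping progress is the same idea.

```python
from collections import deque


def task(name, steps):
    """A task that does `steps` units of work, pausing after each one."""
    for i in range(1, steps + 1):
        # yield hands control back to the scheduler after one unit of work
        yield f"{name}: step {i}"


def run_interleaved(tasks):
    """Round-robin scheduler: run one step of each task in turn on one thread."""
    ready = deque(tasks)
    log = []
    while ready:
        current = ready.popleft()
        try:
            log.append(next(current))
            ready.append(current)  # not finished yet, go to the back of the line
        except StopIteration:
            pass  # task finished, drop it
    return log


log = run_interleaved([task("A", 3), task("B", 2)])
print("\n".join(log))
```

Both tasks are in progress over the same period, yet only one of them runs at any given moment.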

What is Parallel Computing?

Parallel computing is the process of running multiple computational tasks simultaneously by delegating different parts of the computation to different processors that execute at the same time. A parallel program uses several processor cores to perform a computation more quickly. It physically runs parts of a task, or multiple tasks, at the same time using multiple processors. This is different from a single sequential CPU that only seems to do many things at once by switching rapidly between them. Parallelism is one way of realizing a concurrent program.

In a multi-core system, multiple programs can actually make progress at the same time without relying on the operating system's time slicing. If you run, say, two processes on a dual-core system and allocate one core per process, they will both execute at the same time. This is what you can call executing in parallel. Parallel computing therefore requires hardware with multiple processing units. The computations that execute simultaneously may be related, but they need not be.
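A minimal sketch of the two-process case, assuming Python's standard `concurrent.futures.ProcessPoolExecutor`. The helper names `split_into_chunks` and `parallel_sum` are hypothetical. Whether the two workers really land on separate cores is up to the operating system, so treat this as an illustration rather than a guarantee.

```python
from concurrent.futures import ProcessPoolExecutor


def split_into_chunks(numbers, parts):
    """Split `numbers` into `parts` contiguous chunks of near-equal size."""
    size, extra = divmod(len(numbers), parts)
    chunks, start = [], 0
    for i in range(parts):
        end = start + size + (1 if i < extra else 0)
        chunks.append(numbers[start:end])
        start = end
    return chunks


def parallel_sum(numbers, workers=2):
    """Sum each chunk in its own worker process, then combine the partial sums."""
    chunks = split_into_chunks(numbers, workers)
    with ProcessPoolExecutor(max_workers=workers) as pool:
        # Each chunk is handed to a separate process, which may run on its own core.
        partial_sums = pool.map(sum, chunks)
        return sum(partial_sums)


if __name__ == "__main__":  # guard needed on platforms that spawn worker processes
    numbers = list(range(1_000_000))
    print(parallel_sum(numbers) == sum(numbers))
```

For small inputs the cost of starting processes and copying data usually outweighs any gain, so a speedup should only be expected for large workloads.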

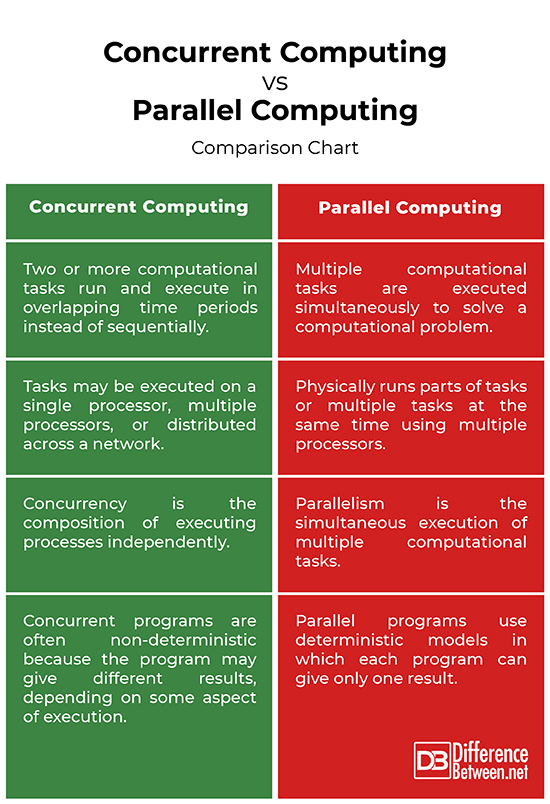

Difference between Concurrent Computing and Parallel Computing

Definition

– Concurrent computing is a form of computing in which two or more computational tasks execute in overlapping time periods instead of sequentially. Concurrency applies whenever more than one task is in progress at the same time. Parallel computing, on the other hand, is a form of computing in which multiple compute resources are used simultaneously to solve a computational problem.

Execution

– In concurrent computing, the tasks may be executed on a single processor, multiple processors, or distributed across a network. A task can execute on a single processor through interleaved execution or on multiple physical processors. A parallel program uses several processor cores to perform a computation more quickly. It physically runs parts of a task, or multiple tasks, at the same time using multiple processors. Concurrency, by contrast, means that multiple tasks are in progress over the same period, but not necessarily running at the same instant.

Processing Power

– Concurrency is a program-structuring technique in which there are multiple threads of control. Conceptually, these threads of control execute at the same time; that is, you can see their effects interspersed. A parallel program is one that uses several processor cores to perform a computation more quickly. The aim is to delegate different parts of the computation to different processors that execute at the same time.
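To make the idea of multiple threads of control concrete, here is a small sketch with one producer thread and one consumer thread connected by a queue, using Python's standard `threading` and `queue` modules. The function names are hypothetical, and the pipeline is structured for clarity, not speed.

```python
import queue
import threading


def producer(items, channel):
    """One thread of control: hand each item to the consumer, then signal the end."""
    for item in items:
        channel.put(item)
    channel.put(None)  # sentinel: nothing more to send


def consumer(channel, results):
    """Another thread of control: square items as they arrive."""
    while True:
        item = channel.get()
        if item is None:
            break
        results.append(item * item)


def run_pipeline(items):
    # A small buffer makes the producer wait whenever the consumer falls behind.
    channel = queue.Queue(maxsize=2)
    results = []
    threads = [
        threading.Thread(target=producer, args=(items, channel)),
        threading.Thread(target=consumer, args=(channel, results)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


print(run_pipeline([1, 2, 3, 4, 5]))  # [1, 4, 9, 16, 25]
```

The program is organized as two cooperating activities regardless of how many cores are available; with a single producer and a single consumer on a first-in, first-out queue, the results keep the input order.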

Model

– Concurrent programs are often non-deterministic, which means they can give different results depending on the precise timing of events. A concurrent program can behave differently from one run to the next because it must interact with external agents that trigger events at unpredictable times. Parallel programs are typically written against a deterministic model, because the goal is only to get the same answer more quickly. A deterministic model means the program gives the same result for the same input on every run.
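The contrast can be sketched with a thread pool from Python's `concurrent.futures`. In this example a random delay stands in for unpredictable external events, and `slow_square` is a hypothetical helper. Collecting results as tasks finish exposes the timing, while `map` hides it by returning results in input order.

```python
import random
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def slow_square(n):
    """Square n after a random delay that stands in for an unpredictable event."""
    time.sleep(random.uniform(0, 0.05))
    return n * n


inputs = [1, 2, 3, 4]

with ThreadPoolExecutor(max_workers=4) as pool:
    # Completion order depends on timing and can differ from run to run.
    futures = [pool.submit(slow_square, n) for n in inputs]
    completion_order = [f.result() for f in as_completed(futures)]

    # map() returns results in input order, so the answer is the same every run.
    input_order = list(pool.map(slow_square, inputs))

print("completion order:", completion_order)  # varies between runs
print("input order:     ", input_order)       # [1, 4, 9, 16]
```

Both lists always contain the same values; only the ordering of the first one depends on timing.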

Summary

In a nutshell, concurrent computing means a program can have multiple computations in progress over the same period, but not necessarily running at the same instant. The computations advance independently of one another. Parallel computing, on the other hand, refers to the simultaneous execution of two or more computations on different processors. You can say that all parallel computing is concurrent, but not all concurrent computing is parallel. Parallel computing is not possible on a single-core CPU; it requires multiple cores or processors.

Is Parallel Computing concurrent?

Parallel computing refers to the simultaneous execution of concurrent tasks on different processors. So, all parallel computing is concurrent, but not all concurrent computing is parallel.

What is the difference between concurrent and simultaneous?

Both words mean “at the same time” and are almost interchangeable, but concurrent implies coordination while simultaneous simply means at the same instant. Concurrent is used more broadly to describe two events that overlap in some way, such as happening within the same time frame but not at exactly the same moment.

Is Async concurrent?

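Async is a programming model, while concurrency describes how tasks are executed. Asynchronous code is itself a form of concurrency: a task gives up control while it waits, so several tasks make progress in overlapping time periods, often on a single thread. The order in which asynchronous tasks complete is generally not known in advance. What async does not imply is parallelism, since the tasks need not run at the same instant.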
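A minimal sketch of the async model using Python's `asyncio`: two coroutines share one thread, and the second starts before the first has finished. The `fetch` coroutine is hypothetical, with `asyncio.sleep` standing in for a network wait.

```python
import asyncio


async def fetch(name, delay, log):
    """Hypothetical I/O-bound task; asyncio.sleep stands in for a network wait."""
    log.append(f"{name} start")
    await asyncio.sleep(delay)  # control returns to the event loop while waiting
    log.append(f"{name} done")
    return name


async def main():
    log = []
    # gather() schedules both coroutines and returns results in argument order.
    results = await asyncio.gather(fetch("a", 0.10, log), fetch("b", 0.05, log))
    return results, log


results, log = asyncio.run(main())
print(results)  # ['a', 'b']
print(log)
```

The log shows task `b` starting while task `a` is still waiting, which is overlap in time without any second thread or core being involved.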

