Difference Between Emotion Recognition in AI and Humans

Emotions matter. They are at the very core of human experience and existence, and they define who we are, in body and in mind. They shape our lives in profound ways and help us decide what is worthy of our attention. They play a key part in how we see, perceive, understand, and reason about the people and things around us. Our emotions drive, motivate, and propel us, however logical, reasonable, and rational we think we are or can be. But what happens when machines start to interpret feelings, emotions, moods, and attention the way humans do?

This is being made possible by a relatively new branch of artificial intelligence (AI) called “Emotion AI” or “Artificial Emotional Intelligence,” also known as affective computing. It is a powerful technology that aims to replicate human emotions in machines so that they can simulate, understand, and react to human emotions. It works by capturing emotions; in computer science, ‘capture’ means acquiring data and storing it in a computer. The goal is to develop Emotion AI that can detect human emotions the way humans do. So what is it, and how does it compare with emotion recognition in humans? Let’s take a look.

Artificial Emotion Recognition

Artificial emotional intelligence, also known as Emotion AI or affective computing, is a relatively new branch of artificial intelligence that aims to replicate human emotions in machines. It combines artificial intelligence, computer science, cognitive science, robotics, psychology, biometrics, and other fields so that we can communicate and interact with machines through our emotions and feelings. It does this by capturing emotional signals. Digital assistants in our homes and on our smartphones, for example, are increasingly designed to respond not only to what we say but also to how we say it. Measurable emotional responses also tell analysts a great deal about what captures our attention. Emotion recognition, in this sense, is the science of identifying human emotions, typically from facial expressions, gestures, body language, and tone of voice.

Emotion Recognition in Humans

Human emotions are universal in the sense that people show a consistent pattern in recognizing them, while still varying somewhat from one individual to another. The ability to recognize and work with emotions is called emotional intelligence: a set of skills that defines how effectively we perceive, understand, use, and interpret our own and others’ feelings and emotions. Emotional intelligence is a major factor in how well we get on with others, both professionally and personally. Humans use multiple non-verbal cues, such as facial expressions, body language, gestures, and tone of voice, to express their emotions and to read them in others. Humans, in fact, have a natural way of understanding and interpreting emotions, in contrast to artificial emotional intelligence, which captures emotions through various technologies in order to replicate them.

Difference Between Emotion Recognition in AI and Humans

Definition

– Emotion recognition is the art and science of identifying human emotions. Humans show a consistent pattern in recognizing emotions, yet individuals vary widely in how accurately they identify the emotions of others. The use of technology to capture and replicate human emotions, however, is a relatively new research area of computer science called ‘artificial emotional intelligence’ or ‘affective computing.’

Phenomena

– Emotion recognition in humans is a natural phenomenon in which people read multiple non-verbal cues, such as facial expressions, body language, gestures, and tone of voice, that others use to express their emotions. However, different people may respond cognitively to the same situation in different ways, which means their thoughts about it may differ. Artificial emotion recognition, on the other hand, involves the recognition, interpretation, and replication of human emotions by computers and machines. There are two broad families of techniques for automatic emotion recognition, knowledge-based techniques and statistical methods, along with hybrid approaches that combine them.
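To make the two families more concrete, here is a minimal, hypothetical sketch that classifies short pieces of text, one of the many channels (alongside faces, gestures, and voice) that emotion recognition systems can use. The knowledge-based classifier looks words up in a small hand-written emotion lexicon; the statistical classifier learns word-emotion associations from labeled examples with a multinomial Naive Bayes model using add-one smoothing. The lexicon, training sentences, labels, and function names are all illustrative assumptions, not taken from any real product, and real systems typically work on images, audio, or physiological signals with far larger models.

```python
import math
from collections import Counter, defaultdict

# Hypothetical hand-built lexicon: the "knowledge" in a knowledge-based approach.
EMOTION_LEXICON = {
    "happy": "joy", "great": "joy", "love": "joy",
    "sad": "sadness", "lonely": "sadness", "cry": "sadness",
    "angry": "anger", "hate": "anger", "furious": "anger",
}


def tokenize(text):
    """Lowercase, split on whitespace, and strip simple punctuation."""
    return [w.strip(".,!?") for w in text.lower().split()]


def knowledge_based(text):
    """Vote for the emotion whose lexicon words appear most often; 'neutral' if none match."""
    votes = Counter(EMOTION_LEXICON[w] for w in tokenize(text) if w in EMOTION_LEXICON)
    return votes.most_common(1)[0][0] if votes else "neutral"


class NaiveBayesEmotion:
    """Statistical approach: multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.label_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text):
        total = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, count in self.label_counts.items():
            # Score = log P(label) + sum over words of log P(word | label).
            score = math.log(count / total)
            denom = sum(self.word_counts[label].values()) + len(self.vocab)
            for w in tokenize(text):
                score += math.log((self.word_counts[label][w] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Tiny, made-up training set for illustration only.
train_texts = [
    "what a wonderful sunny day", "we won the match",
    "i miss my old friends", "the rain makes me gloomy",
    "stop shouting at me", "this traffic is unbearable",
]
train_labels = ["joy", "joy", "sadness", "sadness", "anger", "anger"]

model = NaiveBayesEmotion().fit(train_texts, train_labels)

if __name__ == "__main__":
    print(knowledge_based("I love this, I am so happy!"))  # joy
    print(knowledge_based("such a wonderful day"))         # neutral ("wonderful" is not in the lexicon)
    print(model.predict("such a wonderful day"))           # joy (learned from the training data)
```

The last two calls illustrate the trade-off: the lexicon approach is transparent but blind to any emotional word it was not given, while the statistical model picks such words up from data but is only as reliable as its labeled examples.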

Approach

– Artificial emotional intelligence relies on the capacity to see, read, listen to, understand, and learn about the emotional life of humans. This involves interpreting words and images and detecting facial expressions, gaze direction, gestures, body language, and tone of voice. It also involves machines sensing our heart rate, body temperature, fitness level, and respiration, among other physiological signals, which makes them increasingly capable of gauging human behavior. Humans, by contrast, have a natural way of understanding and interpreting emotions.

Applications

– There are several real-world examples of artificial emotional intelligence that we encounter on a daily basis, such as rooms that adjust lighting and music to our moods, our own digital assistants, toys that engage young minds with natural-seeming emotional responses, and automatic tutoring systems. Human emotional intelligence can help fill the gaps that remain in artificial emotional intelligence. Emotion recognition in humans relies heavily on the visual experience of facial expressions.

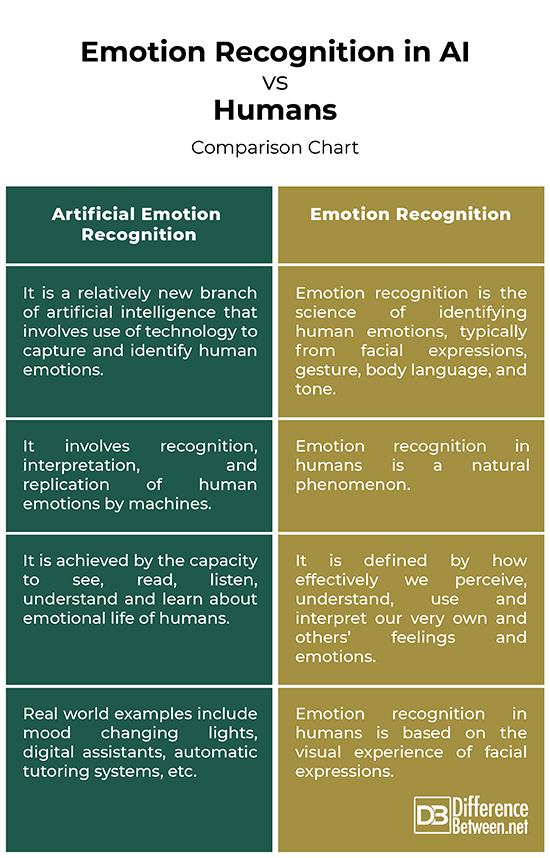

Emotion Recognition in AI vs. Humans: Comparison Chart

Summary of Emotion Recognition in AI vs. Humans

Emotion recognition in humans is a natural phenomenon in which people read multiple non-verbal cues, such as facial expressions, body language, gestures, and tone of voice, that others use to express their emotions. Emotional intelligence is a major factor in how well we get on with others, both professionally and personally. However, we are on the cusp of a new era in which machines start to interpret feelings, emotions, moods, and attention the way humans do. This is being made possible by a relatively new branch of artificial intelligence (AI) called “Artificial Emotional Intelligence” or “Affective Computing.” It involves the recognition, interpretation, and replication of human emotions by computers and machines.


References:

[0] Image credit: https://p1.pxfuel.com/preview/144/209/141/galaxy-face-universe-star-space-fantasy.jpg

[1] Image credit: https://live.staticflickr.com/5675/23555336780_f63a46c5fb_z.jpg

[2] Yonck, Richard. Heart of the Machine: Our Future in a World of Artificial Emotional Intelligence. New York, United States: Skyhorse, 2017. Print.

[3] McStay, Andrew. Emotional AI: The Rise of Empathic Media. Thousand Oaks, California: SAGE Publications, 2018. Print.

[4] Hasson, Gill. Understanding Emotional Intelligence. United Kingdom: Pearson UK, 2015. Print.

[5] Konar, Amit and Aruna Chakraborty. Emotion Recognition: A Pattern Analysis Approach. Hoboken, New Jersey: John Wiley & Sons, 2015. Print.