Difference Between GPU and FPGA

Rapid advances in VLSI technology over the past few decades have enabled the fabrication of billions of transistors on a single chip. This has led to the design and development of much faster and more energy-efficient hardware, with rapidly increasing clock rates and higher memory bandwidths improving performance. However, the improvement in the single-core performance of general-purpose processors has slowed because operating frequencies are no longer rising as quickly. The two main reasons are the widening gap between processor and memory speeds and limits on power. To address these issues, the microprocessor industry shifted to multi-core processors. Other viable alternatives also emerged to overcome such bottlenecks, including custom-designed ICs, reprogrammable FPGAs, and GPUs. So, which would you prefer for your computation requirements: GPUs or FPGAs?

What is GPU?

A Graphics Processing Unit (GPU) is a specialized processor that handles graphics information for output on a display. The term is often used interchangeably with graphics card or video card, although strictly speaking the GPU is the processor on the card. GPUs were initially designed to accelerate graphics rendering, mainly to boost the graphics performance of games on a computer. In fact, most consumer GPUs are still dedicated to delivering superior graphics performance and visuals for life-like gameplay. But today's GPUs are used far beyond the personal computers in which they first appeared.

Before the advent of GPUs, general-purpose computing, as we know it, was only possible with CPUs, the first mainstream processing units manufactured for both consumer use and advanced computing. GPU computing has evolved dramatically over the last few decades and is now used extensively in research on machine learning, AI and deep learning. It took a further step forward with the introduction of GPU programming platforms and APIs such as Compute Unified Device Architecture (CUDA), which paved the way for the development of libraries for deep neural networks.

What is FPGA?

A Field Programmable Gate Array (FPGA) is an entirely different beast. Rather than executing instructions, it is a semiconductor device that can be electronically programmed to become any kind of digital circuit or system. FPGAs were initially used to connect electronic components together, such as bus controllers or processors, but over time their application landscape has transformed dramatically. They can offer superior performance in deep neural network (DNN) applications while consuming less power, and they offer better flexibility and rapid prototyping capabilities compared to custom designs. San Jose, California-based Altera Corporation is one of the largest producers of FPGAs; the company was acquired by Intel in 2015. FPGAs are very different from instruction-based hardware such as GPUs, and the best part is that they can be reconfigured to match the requirements of more data-intensive workloads, such as machine learning applications.
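
To make the idea of "programming a chip to become a circuit" a little more concrete, here is a toy sketch in Python. It assumes a simplified picture in which a logic cell is a small lookup table (a common building block in FPGA fabrics); the names `make_lut` and `half_adder` are hypothetical and purely illustrative, and real devices are configured with hardware description languages and vendor tools rather than Python.

```python
from itertools import product


def make_lut(truth_table):
    """Build a 2-input logic cell from a 4-entry truth table.

    truth_table[i] is the output for inputs (a, b) where i = 2*a + b.
    Changing the table "reconfigures" the cell into a different gate.
    """
    if len(truth_table) != 4:
        raise ValueError("a 2-input lookup table needs exactly 4 entries")
    table = tuple(int(bool(bit)) for bit in truth_table)

    def lut(a, b):
        return table[2 * a + b]

    return lut


# The same kind of cell, configured as two different gates.
and_gate = make_lut([0, 0, 0, 1])
xor_gate = make_lut([0, 1, 1, 0])


def half_adder(a, b):
    """Wire two configured cells together: returns (sum, carry)."""
    return xor_gate(a, b), and_gate(a, b)


for a, b in product((0, 1), repeat=2):
    print(a, b, half_adder(a, b))
```

The point of the sketch is only that the behavior lives in the configuration data (the truth table), not in a fixed instruction set, which is what lets the same hardware be repurposed for a different circuit.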

Difference between GPU and FPGA

Technology

– A GPU is a specialized electronic circuit initially designed to accelerate graphics rendering and now also used for general-purpose scientific and engineering computing. GPUs are designed to operate in single instruction, multiple data (SIMD) fashion: one instruction is applied to many data elements at once. The compute-intensive portions of the code are offloaded to the GPU, which accelerates applications running on the CPU. FPGAs, on the other hand, are semiconductor devices that can be electronically programmed to become any kind of digital circuit or system you want.
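
The SIMD idea can be sketched in a few lines of Python. This is only a model of the programming style, not GPU code: the function names and the lane width of 4 are assumptions made for illustration, and plain Python still runs the lanes one after another.

```python
def saxpy_scalar(a, x, y):
    """One element per step: a * x[i] + y[i], computed in a plain loop."""
    out = []
    for i in range(len(x)):
        out.append(a * x[i] + y[i])
    return out


def saxpy_simd_model(a, x, y, width=4):
    """Model of SIMD execution: one 'instruction' handles `width` elements.

    Returns the result and the number of modelled instructions issued.
    """
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    out = []
    instructions = 0
    for start in range(0, len(x), width):
        xs = x[start:start + width]
        ys = y[start:start + width]
        # The same operation is applied to every lane of the chunk.
        out.extend(a * xi + yi for xi, yi in zip(xs, ys))
        instructions += 1
    return out, instructions


x = list(range(8))
y = [1] * 8
result, issued = saxpy_simd_model(2, x, y, width=4)
print(result, issued)
```

In this model, eight elements need eight steps in the scalar loop but only two modelled instructions at a width of 4, which is the intuition behind why data-parallel workloads map well onto GPUs.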

Latency

– FPGAs offer lower latency than GPUs, which means they can begin processing as soon as input arrives, with minimal delay. The architecture of the FPGA allows it to achieve high computational power without the lengthy design process of a custom chip, making it ideal for the lowest-latency applications. For such latency-critical workloads, FPGAs can deliver results in less time than GPUs, whose architectures are still evolving to close that gap.

Power Efficiency

– Energy efficiency has been an important performance metric for years, and FPGAs excel here too: they are known for their power efficiency. Circuits implemented in the reconfigurable fabric can sustain very high data throughput through parallel processing. FPGAs can also be reconfigured, a flexibility that gives them an edge over their GPU counterparts in certain application domains. Many widely used data operations can be implemented efficiently on FPGAs through hardware programmability. GPUs are also power efficient, but only for SIMD streams.

Floating Point Operations

– Many high-performance computing applications, such as deep learning, depend heavily on floating point operations. Although the flexible architecture of FPGAs shows superb potential for sparse networks, one of the hottest topics in ML applications, FPGAs struggle to reach high speeds in applications that make extensive use of floating point arithmetic. Floating point operations are something GPUs are really good at. At the time of writing, the fastest GPUs had a peak floating point performance of about 15 TFLOPS.
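
A figure in the region of 15 TFLOPS can be sanity-checked with simple arithmetic. The sketch below uses the usual rule of thumb that peak throughput is cores × clock × floating point operations per core per cycle, counting a fused multiply-add as two operations. The core count and clock speed are hypothetical round numbers chosen for illustration, not the specification of any particular GPU.

```python
def peak_tflops(cores, clock_ghz, flops_per_cycle=2):
    """Theoretical peak throughput in TFLOPS.

    cores * clock_ghz gives billions of cycles per second across all cores;
    multiplying by flops_per_cycle gives GFLOPS, and dividing by 1000
    converts to TFLOPS. flops_per_cycle=2 assumes one fused multiply-add
    per core per cycle.
    """
    return cores * clock_ghz * flops_per_cycle / 1000.0


# Hypothetical GPU: 5000 cores at 1.5 GHz (illustrative values only).
print(peak_tflops(cores=5000, clock_ghz=1.5))
```

This is a theoretical ceiling; real applications usually reach only a fraction of it because of memory bandwidth and other bottlenecks.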

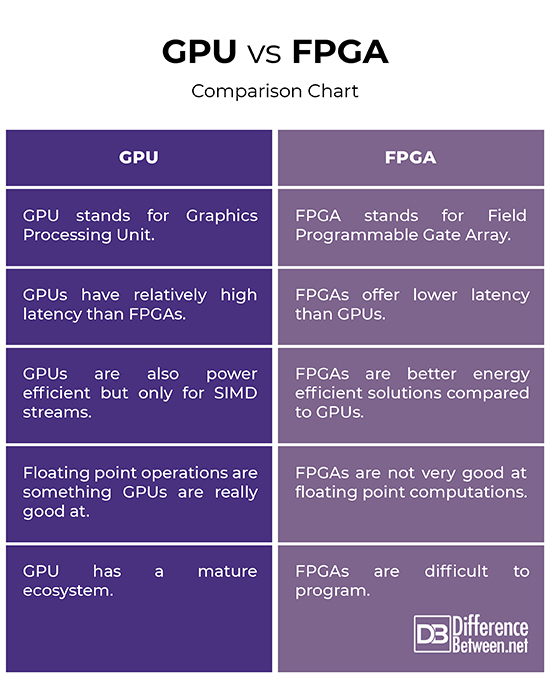

GPU vs. FPGA: Comparison Chart

Summary

In a nutshell, GPUs allow a flexible development environment and faster turnaround times, while FPGAs offer hardware-level flexibility and rapid prototyping capabilities compared to custom designs. GPUs are best for high-performance computing applications that depend on floating point arithmetic. FPGAs are a strong fit for power-sensitive applications, and their latency is much more deterministic because they are reconfigurable hardware that can be electronically programmed to become any kind of digital circuit or system. In some application areas that require low latency, such as military applications like missile guidance systems, FPGAs are very hard to beat.
