Difference Between MapReduce and Spark

Apache Spark is one of the most active open-source projects in the Hadoop ecosystem and one of the hottest technologies in big data analysis today. Both MapReduce and Spark are open source frameworks for big data processing. However, Spark is known for in-memory processing and is ideal for instances where data fits in the memory, particularly on dedicated clusters. We compare the two leading software frameworks to help you decide which one’s right for you.

What is Hadoop MapReduce?

MapReduce is a programming model within the Hadoop framework for distributed computing based on Java. It is used to access big data in the Hadoop File System (HDFS). It is a way of structuring your computation that allows it to easily be run on lots of machines. It enables massive scalability across hundreds or thousands of servers in a Hadoop cluster. It allows writing distributed, scalable jobs with little effort. It serves two essential functions: it filters and distributes work to various nodes within the cluster or map. It is used for large scale data analysis using multiple machines in the cluster. A MapReduce framework is typically a three-step process: Map, Shuffle and Reduce.

What is Apache Spark?

Spark is an open source, super fast big data framework widely considered the successor to the MapReduce framework for processing big data. Spark is a Hadoop enhancement to MapReduce used for big data workloads. For an organization that has massive amounts of data to analyze, Spark offers a fast and easy way to analyze that data across an entire cluster of computers. It is a multi-language unified analytics engine for big data and machine learning. Its unified programming model makes it the best choice for developers building data-rich analytic applications. It started in 2009 as a research project at UC Berkley’s AMPLab, a collaborative effort involving students, researchers and faculty.

Difference between MapReduce and Spark

Data Processing

– Hadoop processes data in batches and MapReduce operates in sequential steps by reading data from the cluster and performing its operations on the data. The results are then written back to the cluster. It is an effective way of processing large, static datasets. Spark, on the other hand, is a general purpose distributed data processing engine that processes data in parallel across a cluster. It performs real-time and graph processing of data.

Performance

– Hadoop MapReduce is relatively slower as it performs operations on the disk and it cannot deliver near real-time analytics from the data. Spark, on the other hand, is designed in such a way that it transforms data in-memory and not in disk I/O, which in turns reduces the processing time. Spark is actually 100 times faster in-memory and 10 times faster on disk. Unlike MapReduce, it can deal with real-time processing.

Cost

– Hadoop runs at a lower cost as it is open-source software and it requires more memory on disk which is relatively an inexpensive commodity. Spark requires more RAM which means setting up Spark clusters can be more expensive. Moreover, Spark is relatively new, so experts in Spark are rare finds and more costly.

Fault Tolerance

– MapReduce is strictly disk-based means it uses persistent storage. While both provide some level of handling failures, the fault tolerance of Spark is based mainly upon its RDD (Resilient Distributed Datasets) operations. RDD is the building block of Apache Spark. Hadoop is naturally fault tolerant because it’s designed to replicate data across several nodes.

Ease of Use

– MapReduce does not have an interactive mode and is quite complex. It needs to handle low level APIs to process the data, which requires lots of coding, and coding requires knowledge of the data structures involved. Spark is engineered from the bottom up for performance and ease of use, which comes from its general programming model. Also, the parallel programs look very much like sequential programs, making them easier to develop.

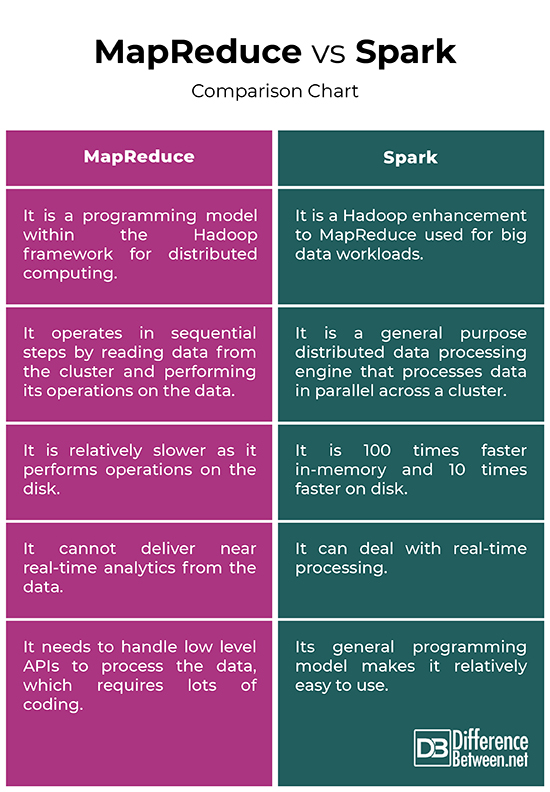

MapReduce vs. Spark: Comparison Chart

Summary

The main difference between the two frameworks is that MapReduce processes data on disk whereas Spark processes and retains data in memory for subsequent steps. As a result, Spark is 100 times faster in-memory and 10 times faster on disk than MapReduce. Hadoop uses the MapReduce to process data, while Spark uses resilient distributed datasets (RDDs). Spark is a Hadoop enhancement of MapReduce for processing big data. While MapReduce is still used for large scale data analysis, Spark has become the go-to processing framework in Hadoop environments.

Why Spark is faster than MapReduce?

Spark processes and retains data in memory for subsequent steps, which makes it 100 times faster for data in RAM and up to 10 times faster for data in storage. Its RDDs enable multiple map operations in memory, while MapReduce has to write interim results onto a disk.

What are the differences between Spark and MapReduce name at least two points?

First, MapReduce cannot deliver near real-time analytics from the data, while Spark can deal with real time processing of data. And second, MapReduce operates in sequential steps whereas Spark processes data in parallel across a cluster.

Is Spark more advanced than MapReduce?

Spark is widely considered the successor to the MapReduce framework for processing big data. In fact, Spark is one of the most active open-source projects in the Hadoop ecosystem and one of the hottest technologies in big data analysis today.

Does Spark need MapReduce?

Spark does not use or need MapReduce, but only the idea of it and not the exact implementation.

- Difference Between Caucus and Primary - June 18, 2024

- Difference Between PPO and POS - May 30, 2024

- Difference Between RFID and NFC - May 28, 2024

Search DifferenceBetween.net :

Leave a Response

References :

[0]Alapati, Sam R. Expert Hadoop Administration: Managing, Tuning, and Securing Spark, YARN, and HDFS. Massachusetts, United States: Addison-Wesley, 2016. Print

[1]Kane, Frank. Frank Kane's Taming Big Data with Apache Spark and Python. Birmingham, United Kingdom: Packt Publishing, 2017. Print

[2]Jeyaraj, Rathinaraja, et al. Big Data with Hadoop MapReduce: A Classroom Approach. Florida, United States: CRC Press, 2020. Print

[3]Perrin, Jean-Georges. Spark in Action: Covers Apache Spark 3 with Examples in Java, Python, and Scala. New York, United States: Simon and Schuster, 2020. Print