Difference Between ORC and Parquet

Both ORC and Parquet are popular open-source columnar file storage formats in the Hadoop ecosystem and they are quite similar in terms of efficiency and speed, and above all, they are designed to speed up big data analytics workloads. Working with ORC files is just as simple as working with Parquet files in that they offer efficient read and write capabilities over their row-based counterparts. Both have their fair share of pros and cons, and it is tough to figure out which one’s better than the other. Let’s take a better look at each of them. We’ll start with ORC first, then move to Parquet.

ORC

ORC, short for Optimized Row Columnar, is a free and open-source columnar storage format designed for Hadoop workloads. As the name suggests, ORC is a self-describing, optimized file format that stores data in columns which enables users to read and decompress just the pieces they need. It is a successor to the traditional Record Columnar File (RCFile) format designed to overcome limitations of other Hive file formats. It takes significantly less time to access data and also reduces the size of the data up to 75 percent. ORC provides a more efficient and better way to store data to be accessed through SQL-on-Hadoop solutions such as Hive using Tez. ORC provides many advantages over other Hive file formats such as high data compression, faster performance, predictive push down feature, and more over, the stored data is organized into stripes, which enable large, efficient reads from HDFS.

Parquet

Parquet is yet another open-source column-oriented file format in the Hadoop ecosystem backed by Cloudera, in collaboration with Twitter. Parquet is very popular among the big data practitioners because it provides a plethora of storage optimizations, particularly in analytics workloads. Like ORC, Parquet provides columnar compressions saving you a great deal of storage space while allowing you to read individual columns instead of reading complete files. It provides significant advantages in performance and storage requirements with respect to traditional storage solutions. It is more efficient at doing data IO style operations and it is very flexible when it comes to supporting a complex nested data structure. In fact, it is particularly designed keeping nested data structures in mind. Parquet is also a better file format in reducing storage costs and speeding up the reading step when it comes to large sets of data. Parquet works really well with Apache Spark. In fact, it is the default file format for writing and reading data in Spark.

Difference between ORC and Parquet

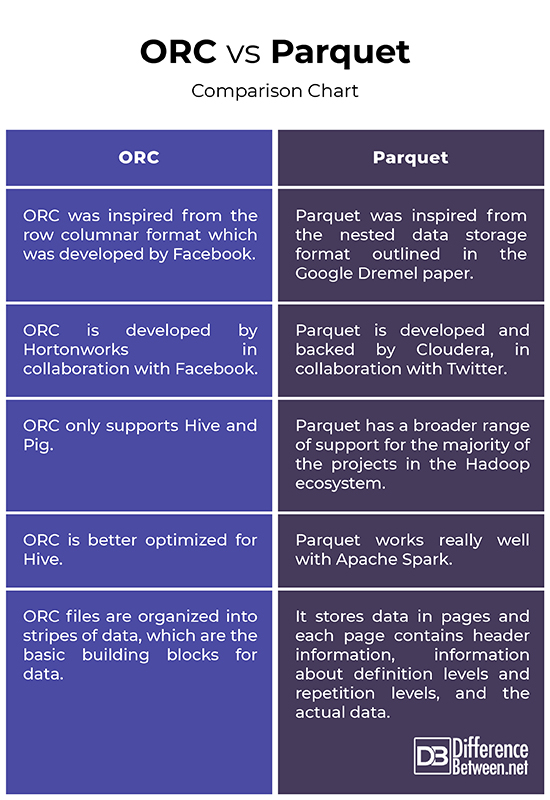

Origin

– ORC was inspired from the row columnar format which was developed by Facebook to support columnar reads, predictive pushdown and lazy reads. It is a successor to the traditional Record Columnar File (RCFile) format and provides a more efficient way to store relational data than the RCFile, reducing the size of the data by up to 75 percent. Parquet, on the other hand, was inspired from the nested data storage format outlined in the Google Dremel paper and developed by Cloudera, in collaboration with Twitter. Parquet is now an Apache incubator project.

Support

– Both ORC and Parquet are popular column-oriented big data file formats that share almost a similar design in that both share data in columns. While Parquet has a much broader range of support for the majority of the projects in the Hadoop ecosystem, ORC only supports Hive and Pig. One key difference between the two is that ORC is better optimized for Hive, whereas Parquet works really well with Apache Spark. In fact, Parquet is the default file format for writing and reading data in Apache Spark.

Indexing

– Working with ORC files is just as simple as working with Parquet files. Both are great for read-heavy workloads. However, ORC files are organized into stripes of data, which are the basic building blocks for data and are independent of each other. Each stripe has index, row data and footer. The footer is where the key statistics for each column within a stripe such as count, min, max, and sum are cached. Parquet, on the other hand, stores data in pages and each page contains header information, information about definition levels and repetition levels, and the actual data.

ORC vs. Parquet: Comparison Chart

Summary

Both ORC and Parquet are two of the most popular open-source column-oriented file storage formats in the Hadoop ecosystem designed to work well with data analytics workloads. Parquet was developed by Cloudera and Twitter together to tackle the issues with storing large data sets with high columns. ORC is the successor to the traditional RCFile specification and the data stored in the ORC file format is organized into stripes, which are highly optimized for HDFS read operations. Parquet, on the other hand, is a better choice in terms of adaptability if you’re using several tools in the Hadoop ecosystem. Parquet is better optimized for use With Apache Spark, whereas ORC is optimized for Hive. But for the most part, both are quite similar with no significant differences between the two.

- Difference Between Caucus and Primary - June 18, 2024

- Difference Between PPO and POS - May 30, 2024

- Difference Between RFID and NFC - May 28, 2024

Search DifferenceBetween.net :

Leave a Response

References :

[0]Chambers, Bill and Matei Zaharia. Spark: The Definitive Guide: Big Data Processing Made Simple. California, United States: O'Reilly Media, 2018. Print

[1]Aven, Jeffrey. Sams Teach Yourself Hadoop in 24 Hours. Indiana, United States: Sams Publishing, 2017. Print

[2]Karambelkar, Hrishikesh Vijay. Apache Hadoop 3 Quick Start Guide. Birmingham, United Kingdom: Packt Publishing, 2018. Print

[3]Luu, Hien. Beginning Apache Spark 2: With Resilient Distributed Datasets, Spark SQL, Structured Streaming and Spark Machine Learning library. New York, United States: Apress, 2018. Print

[4]Sankar, Krishna. Fast Data Processing with Spark 2. Birmingham, United Kingdom: Packt Publishing, 2016. Print

[5]Image credit: https://commons.wikimedia.org/wiki/File:Freedomstd_orc-vd-cli.png

[6]Image credit: https://commons.wikimedia.org/wiki/File:ORC_screenshot.png